By Briley Lewis

February 3, 2023

A combination of citizen science and machine learning is a promising new technique for astronomers looking for exoplanets.

Artist's depictions of exoplanets. (Image credit: ESA/Hubble, N. Bartmann)

Many of our imagined sci-fi futures pit humans and machines against each other — but what if they collaborated instead? This may, in fact, be the future of astronomy.

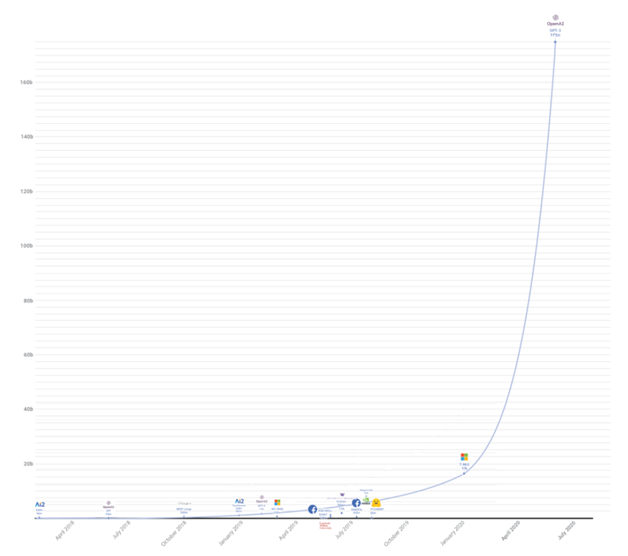

As data sets grow larger and larger, they become more difficult for small teams of researchers to analyze. Scientists often turn to complex machine-learning algorithms, but these can't yet replace human intuition and our brains' superb pattern-recognition skills. However, a combination of the two could be a perfect team. Astronomers recently tested a machine-learning algorithm that used information from citizen-scientist volunteers to identify exoplanets in data from NASA's Transiting Exoplanet Survey Satellite (TESS).

"This work shows the benefits of using machine learning with humans in the loop," Shreshth Malik, a physicist at the University of Oxford in the U.K. and lead author of the publication, told Space.com.

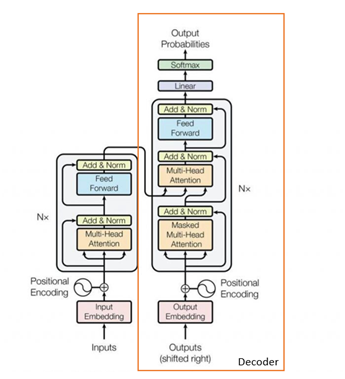

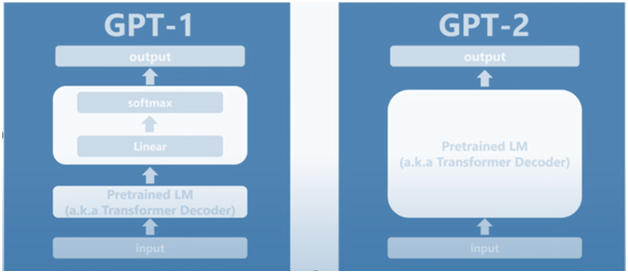

The researchers used a typical machine-learning algorithm known as a convolutional neural network*. This computer algorithm looks at images or other information that humans have labeled correctly (a.k.a "training data"), and learns how to identify important features. After it's been trained, the algorithm can identify these features in new data it hasn't seen before.

For the algorithm to perform accurately, though, it needs a lot of this labeled training data. "It's difficult to get labels on this scale without the help of citizen scientists," Nora Eisner, an astronomer at the Flatiron Institute in New York City and co-author on the study, told Space.com.

People from across the world contributed by searching for and labeling exoplanet transits through the Planet Hunters TESS project on Zooniverse, an online platform for crowd-sourced science. Citizen science has the extra benefit of "sharing the euphoria of discovery with non-scientists, promoting science literacy and public trust in scientific research," Jon Zink, an astronomer at Caltech not affiliated with this new study, told Space.com.

Finding exoplanets is tricky work — they're tiny and faint compared to the massive stars they orbit. In data from telescopes like TESS, astronomers can spot faint dips in a star's light as a planet passes between it and the observatory, known as the transit method.

However, satellites jiggle around in space and stars aren't perfect light bulbs, making transits sometimes tricky to detect. Zink thinks partnerships with machine learning "could significantly improve our ability to detect exoplanets" in this kind of real-world, noisy data.

Some planets are harder to find than others, too. Long-period planets orbit their star less frequently, meaning a longer period of time between dips in the light. TESS only studies each patch of sky for a month at a time, so for these planets may only capture one transit instead of several periodic changes.

"With citizen science, we are particularly good at identifying long-period planets, which are the planets that tend to be missed by automated transit searches," Eisner said.

This work has the potential to go far beyond exoplanets, as machine learning is quickly becoming a popular technique across many aspects of astronomy, Malik said. "I can only see its impact increasing as our datasets and methods become better."

The research was presented at the Machine Learning and the Physical Sciences Workshop at the 36th conference on Neural Information Processing Systems (NeurIPS) in December and is described in a paper posted to the preprint server arXiv.org.

Follow the author at @briles_34 on Twitter. Follow us on Twitter @Spacedotcomand on Facebook.

See: https://www.space.com/exoplanet-dis...-4946-BBF9-FCF99E97E8A4&utm_source=SmartBrief

* Convolutional neural networks are distinguished from other neural networks by their superior performance with image, speech, or audio signal inputs. They have three main types of layers, which are:

Convolutional Layer

The convolutional layer is the core building block of a CNN, and it is where the majority of computation occurs. It requires a few components, which are input data, a filter, and a feature map. Let’s assume that the input will be a color image, which is made up of a matrix of pixels in 3D. This means that the input will have three dimensions—a height, width, and depth—which correspond to RGB in an image. We also have a feature detector, also known as a kernel or a filter, which will move across the receptive fields of the image, checking if the feature is present. This process is known as a convolution.

The feature detector is a two-dimensional (2-D) array of weights, which represents part of the image. While they can vary in size, the filter size is typically a 3x3 matrix; this also determines the size of the receptive field. The filter is then applied to an area of the image, and a dot product is calculated between the input pixels and the filter. This dot product is then fed into an output array. Afterwards, the filter shifts by a stride, repeating the process until the kernel has swept across the entire image. The final output from the series of dot products from the input and the filter is known as a feature map, activation map, or a convolved feature.

After each convolution operation, a CNN applies a Rectified Linear Unit (ReLU) transformation to the feature map, introducing nonlinearity to the model.

As we mentioned earlier, another convolution layer can follow the initial convolution layer. When this happens, the structure of the CNN can become hierarchical as the later layers can see the pixels within the receptive fields of prior layers. As an example, let’s assume that we’re trying to determine if an image contains a bicycle. You can think of the bicycle as a sum of parts. It is comprised of a frame, handlebars, wheels, pedals, et cetera. Each individual part of the bicycle makes up a lower-level pattern in the neural net, and the combination of its parts represents a higher-level pattern, creating a feature hierarchy within the CNN.

Pooling Layer

Pooling layers, also known as downsampling, conducts dimensionality reduction, reducing the number of parameters in the input. Similar to the convolutional layer, the pooling operation sweeps a filter across the entire input, but the difference is that this filter does not have any weights. Instead, the kernel applies an aggregation function to the values within the receptive field, populating the output array. There are two main types of pooling:

Fully-Connected Layer

The name of the full-connected layer aptly describes itself. As mentioned earlier, the pixel values of the input image are not directly connected to the output layer in partially connected layers. However, in the fully-connected layer, each node in the output layer connects directly to a node in the previous layer.

This layer performs the task of classification based on the features extracted through the previous layers and their different filters. While convolutional and pooling layers tend to use ReLu functions, FC layers usually leverage a softmax activation function to classify inputs appropriately, producing a probability from 0 to 1.

See: https://www.ibm.com/topics/convolutional-neural-networks

Our brains process a huge amount of information the second we see an image. Each neuron works in its own receptive field and is connected to other neurons to insure they cover the entire visual field being observed. As each neuron responds to specific stimuli in the restricted region of the visual field called the receptive field in the biological vision system, so CNN processes data only in its receptive field as well. Observed layers are arranged in such a way so that they detect simpler patterns first (lines, curves, etc.) and more complex patterns (faces, objects, etc.) further along. By using a CNN, one can give sight to computers. With this computerised "sight" investigators are now searching for exo-planets.

Hartmann352

February 3, 2023

A combination of citizen science and machine learning is a promising new technique for astronomers looking for exoplanets.

Artist's depictions of exoplanets. (Image credit: ESA/Hubble, N. Bartmann)

Many of our imagined sci-fi futures pit humans and machines against each other — but what if they collaborated instead? This may, in fact, be the future of astronomy.

As data sets grow larger and larger, they become more difficult for small teams of researchers to analyze. Scientists often turn to complex machine-learning algorithms, but these can't yet replace human intuition and our brains' superb pattern-recognition skills. However, a combination of the two could be a perfect team. Astronomers recently tested a machine-learning algorithm that used information from citizen-scientist volunteers to identify exoplanets in data from NASA's Transiting Exoplanet Survey Satellite (TESS).

"This work shows the benefits of using machine learning with humans in the loop," Shreshth Malik, a physicist at the University of Oxford in the U.K. and lead author of the publication, told Space.com.

The researchers used a typical machine-learning algorithm known as a convolutional neural network*. This computer algorithm looks at images or other information that humans have labeled correctly (a.k.a. "training data") and learns how to identify important features. After it's been trained, the algorithm can identify these features in new data it hasn't seen before.

For the algorithm to perform accurately, though, it needs a lot of this labeled training data. "It's difficult to get labels on this scale without the help of citizen scientists," Nora Eisner, an astronomer at the Flatiron Institute in New York City and co-author on the study, told Space.com.

People from across the world contributed by searching for and labeling exoplanet transits through the Planet Hunters TESS project on Zooniverse, an online platform for crowd-sourced science. Citizen science has the extra benefit of "sharing the euphoria of discovery with non-scientists, promoting science literacy and public trust in scientific research," Jon Zink, an astronomer at Caltech not affiliated with this new study, told Space.com.

Finding exoplanets is tricky work — they're tiny and faint compared to the massive stars they orbit. In data from telescopes like TESS, astronomers can spot faint dips in a star's light as a planet passes between it and the observatory, known as the transit method.
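To make the "faint dip" concrete, here is a minimal sketch of an idealized transit in a star's light curve. It assumes a perfectly dark planet, a uniformly bright star, and a box-shaped dip (no limb darkening, no noise), in which case the fractional dip is roughly the square of the planet-to-star radius ratio. The function names and the example numbers are illustrative and are not taken from the study.

```python
import numpy as np

def transit_depth(radius_ratio):
    """Fractional drop in brightness for an idealized transit: (Rp / Rs) ** 2."""
    return radius_ratio ** 2

def box_transit_flux(times, mid_time, duration, radius_ratio):
    """Return normalized flux that is 1.0 outside transit and dips inside it."""
    flux = np.ones_like(times, dtype=float)
    in_transit = np.abs(times - mid_time) < duration / 2
    flux[in_transit] -= transit_depth(radius_ratio)
    return flux

# Hypothetical example: a planet one tenth the radius of its star,
# observed for 10 days with a 0.2-day transit centered on day 5.
times = np.linspace(0.0, 10.0, 1001)
flux = box_transit_flux(times, mid_time=5.0, duration=0.2, radius_ratio=0.1)
print(flux.min())  # about 0.99, i.e. a dip of roughly 1 percent
```

Even for a planet this large the star dims by only about one percent, which is why noise in real data makes transits hard to spot.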

However, satellites jiggle around in space and stars aren't perfect light bulbs, making transits sometimes tricky to detect. Zink thinks partnerships with machine learning "could significantly improve our ability to detect exoplanets" in this kind of real-world, noisy data.

Some planets are harder to find than others, too. Long-period planets orbit their star less frequently, meaning a longer period of time between dips in the light. TESS only studies each patch of sky for a month at a time, so for these planets it may capture only one transit instead of several periodic changes.
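The arithmetic behind this can be sketched in a few lines. The example below assumes an observing window of 27 days as a stand-in for "a month", and the orbital periods and first-transit times are made-up values chosen for illustration.

```python
def transits_in_window(period_days, first_transit_day, window_days):
    """Count transits whose midpoints fall within [0, window_days).

    Assumes first_transit_day >= 0 and period_days > 0.
    """
    count = 0
    t = first_transit_day
    while t < window_days:
        count += 1
        t += period_days
    return count

WINDOW_DAYS = 27.0  # assumption: roughly one month of continuous observation

# Hypothetical short-period planet: many repeated dips to search for.
print(transits_in_window(3.0, 1.0, WINDOW_DAYS))    # 9
# Hypothetical long-period planet: a single dip, with no repetition to confirm it.
print(transits_in_window(40.0, 10.0, WINDOW_DAYS))  # 1
```

Automated searches that rely on periodic repetition have little to work with in the second case, which is where human volunteers tend to help most.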

"With citizen science, we are particularly good at identifying long-period planets, which are the planets that tend to be missed by automated transit searches," Eisner said.

This work has the potential to go far beyond exoplanets, as machine learning is quickly becoming a popular technique across many aspects of astronomy, Malik said. "I can only see its impact increasing as our datasets and methods become better."

The research was presented at the Machine Learning and the Physical Sciences Workshop at the 36th conference on Neural Information Processing Systems (NeurIPS) in December and is described in a paper posted to the preprint server arXiv.org.

Follow the author at @briles_34 on Twitter. Follow us on Twitter @Spacedotcom and on Facebook.

See: https://www.space.com/exoplanet-dis...-4946-BBF9-FCF99E97E8A4&utm_source=SmartBrief

* Convolutional neural networks are distinguished from other neural networks by their superior performance with image, speech, or audio signal inputs. They have three main types of layers, which are:

- Convolutional layer

- Pooling layer

- Fully-connected (FC) layer

Convolutional Layer

The convolutional layer is the core building block of a CNN, and it is where the majority of computation occurs. It requires a few components: input data, a filter, and a feature map. Let’s assume that the input is a color image, which is stored as a 3D array of pixel values. The input therefore has three dimensions (height, width, and depth), where the depth corresponds to the three RGB color channels. We also have a feature detector, also known as a kernel or a filter, which moves across the receptive fields of the image, checking if the feature is present. This process is known as a convolution.

The feature detector is a two-dimensional (2-D) array of weights, which represents part of the image. While filters can vary in size, a 3x3 matrix is typical; the filter size also determines the size of the receptive field. The filter is applied to an area of the image, and a dot product is calculated between the input pixels and the filter. This dot product is then fed into an output array. Afterwards, the filter shifts by a stride, repeating the process until the kernel has swept across the entire image. The final output from the series of dot products between the input and the filter is known as a feature map, activation map, or convolved feature.
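The sliding dot product described above can be sketched with NumPy. This is a simplified illustration rather than how any particular library implements it: it assumes a single-channel (grayscale) input, no padding, and an unflipped kernel (the cross-correlation form that deep-learning code usually calls convolution). The function name and example values are made up.

```python
import numpy as np

def convolve2d(image, kernel, stride=1):
    """Slide `kernel` over a 2-D `image` (no padding) and return the feature map."""
    k_h, k_w = kernel.shape
    out_h = (image.shape[0] - k_h) // stride + 1
    out_w = (image.shape[1] - k_w) // stride + 1
    feature_map = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            r, c = i * stride, j * stride
            patch = image[r:r + k_h, c:c + k_w]       # current receptive field
            feature_map[i, j] = np.sum(patch * kernel)  # dot product with the filter
    return feature_map

# A 5x5 image whose values increase left to right, and a 3x3 filter
# that responds to horizontal changes in brightness.
image = np.arange(25, dtype=float).reshape(5, 5)
kernel = np.array([[1, 0, -1],
                   [1, 0, -1],
                   [1, 0, -1]], dtype=float)

feature_map = convolve2d(image, kernel)
print(feature_map.shape)  # (3, 3)
print(feature_map)        # every entry is -6.0 for this steadily increasing image
```

With `stride=2` the same input would give a 2x2 feature map, since the filter then skips every other position.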

After each convolution operation, a CNN applies a Rectified Linear Unit (ReLU) transformation to the feature map, introducing nonlinearity to the model.
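ReLU itself is a one-line operation: negative values become zero and positive values pass through unchanged. A minimal sketch, with made-up feature-map values:

```python
import numpy as np

def relu(x):
    """Rectified Linear Unit: element-wise max(x, 0)."""
    return np.maximum(x, 0.0)

feature_map = np.array([[-6.0, 2.0],
                        [0.5, -1.5]])
activated = relu(feature_map)
print(activated)  # negatives are replaced by 0.0, positives are kept
```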

Another convolution layer can follow the initial convolution layer. When this happens, the structure of the CNN can become hierarchical, as the later layers can see the pixels within the receptive fields of prior layers. As an example, let’s assume that we’re trying to determine if an image contains a bicycle. You can think of the bicycle as a sum of parts. It is composed of a frame, handlebars, wheels, pedals, and so on. Each individual part of the bicycle makes up a lower-level pattern in the neural net, and the combination of its parts represents a higher-level pattern, creating a feature hierarchy within the CNN.
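One way to see why deeper layers can pick up larger patterns is to track how much of the original image a single output value depends on. The sketch below uses the standard recurrence for receptive-field size in a stack of layers without dilation; the layer configurations are hypothetical examples.

```python
def receptive_field(layers):
    """Receptive-field width (in input pixels) of one output unit.

    `layers` is a list of (kernel_size, stride) tuples ordered from input to output.
    """
    size, jump = 1, 1  # jump = distance in input pixels between adjacent units
    for kernel_size, stride in layers:
        size += (kernel_size - 1) * jump
        jump *= stride
    return size

print(receptive_field([(3, 1)]))                  # 3: one 3x3 convolution
print(receptive_field([(3, 1), (3, 1)]))          # 5: two stacked 3x3 convolutions
print(receptive_field([(3, 1), (2, 2), (3, 1)]))  # 8: conv, 2x2 pooling, conv
```

Each added layer widens the region of the image that feeds into one value, so later layers can respond to whole wheels or frames rather than individual edges.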

Pooling Layer

Pooling layers, also known as downsampling layers, perform dimensionality reduction, reducing the number of parameters in the input. Similar to the convolutional layer, the pooling operation sweeps a filter across the entire input, but the difference is that this filter does not have any weights. Instead, the kernel applies an aggregation function to the values within the receptive field, populating the output array. There are two main types of pooling:

- Max pooling: As the filter moves across the input, it selects the pixel with the maximum value to send to the output array. As an aside, this approach tends to be used more often compared to average pooling.

- Average pooling: As the filter moves across the input, it calculates the average value within the receptive field to send to the output array.
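Both kinds of pooling can be sketched with the same sliding-window loop, swapping only the aggregation function. The example assumes a 2x2 window with a stride of 2, a common but not universal choice; the function name and input values are illustrative.

```python
import numpy as np

def pool2d(feature_map, size=2, stride=2, mode="max"):
    """Downsample a 2-D feature map with max or average pooling."""
    reducers = {"max": np.max, "average": np.mean}
    if mode not in reducers:
        raise ValueError(f"unknown pooling mode: {mode!r}")
    reduce_fn = reducers[mode]
    out_h = (feature_map.shape[0] - size) // stride + 1
    out_w = (feature_map.shape[1] - size) // stride + 1
    pooled = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            r, c = i * stride, j * stride
            pooled[i, j] = reduce_fn(feature_map[r:r + size, c:c + size])
    return pooled

feature_map = np.array([[1, 3, 2, 0],
                        [4, 6, 1, 1],
                        [0, 2, 9, 5],
                        [1, 1, 3, 7]], dtype=float)

max_pooled = pool2d(feature_map, mode="max")
avg_pooled = pool2d(feature_map, mode="average")
print(max_pooled)  # [[6. 2.] [2. 9.]]
print(avg_pooled)  # [[3.5 1. ] [1.  6. ]]
```

In both cases a 4x4 input shrinks to 2x2, which is the dimensionality reduction the text describes.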

Fully-Connected Layer

The name of the fully-connected layer aptly describes itself. In the convolutional and pooling layers, which are only partially connected, the pixel values of the input image are not directly connected to the output layer. In the fully-connected layer, however, each node in the output layer connects directly to every node in the previous layer.

This layer performs the task of classification based on the features extracted through the previous layers and their different filters. While convolutional and pooling layers tend to use ReLU functions, FC layers usually leverage a softmax activation function to classify inputs appropriately, producing a probability from 0 to 1 for each class.
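A rough sketch of this final step: a fully-connected layer is a matrix multiplication plus a bias, and softmax turns the resulting scores into probabilities that sum to 1. The two-class setup, the feature length of 8, and the random weights are assumptions made for illustration; a trained network would have learned its weights from labeled data.

```python
import numpy as np

def fully_connected(features, weights, bias):
    """Each output node is a weighted sum over every input feature."""
    return weights @ features + bias

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    shifted = logits - np.max(logits)  # subtract the max for numerical stability
    exp = np.exp(shifted)
    return exp / np.sum(exp)

rng = np.random.default_rng(0)
features = rng.normal(size=8)      # flattened features from earlier layers (made up)
weights = rng.normal(size=(2, 8))  # 2 hypothetical classes, e.g. "transit" and "no transit"
bias = np.zeros(2)

probabilities = softmax(fully_connected(features, weights, bias))
print(probabilities, probabilities.sum())
```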

See: https://www.ibm.com/topics/convolutional-neural-networks

Our brains process a huge amount of information the second we see an image. Each neuron works in its own receptive field and is connected to other neurons to ensure that together they cover the entire visual field. Just as each neuron in the biological vision system responds only to stimuli in a restricted region of the visual field, called its receptive field, each unit in a CNN processes data only within its own receptive field. The layers are arranged so that they detect simpler patterns first (lines, curves, etc.) and more complex patterns (faces, objects, etc.) further along. By using a CNN, one can give sight to computers. With this computerised "sight," investigators are now searching for exoplanets.

Hartmann352